Dev-Diary 2 — Building an AI Agent at Netguru

June 02, 2025

Dev-Diary #2: Stocking the Toolbox

Every decent agent needs reliable senses before it starts “thinking.”

Here’s our starter kit — pragmatic, low-friction, already half-wired:

- Slack feed: fetches recent messages and searches through them.

- Google Drive reader: walks folders, parses docs.

- Apollo API: pulls crisp company intel without juggling scrapers or captchas.

All of this is done WITHOUT ANY VECTOR DATABASE. When the agent needs information, it just runs a tool, e.g. get_recent_slack_messages.

And it is working really well.
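To make the idea concrete, here is a minimal sketch of what "just runs the tool" can look like. Only the name get_recent_slack_messages comes from our setup; the canned message list, the `TOOLS` registry, the `run_tool_call` helper and the JSON shape of a tool call are assumptions made up for illustration, not our actual code and not the Slack API.

```python
import json
from datetime import datetime, timedelta, timezone

# Canned data standing in for the Slack API (assumption: a real tool
# would make an HTTP request here instead of reading a list).
_NOW = datetime(2025, 6, 2, 12, 0, tzinfo=timezone.utc)
FAKE_SLACK = [
    {"channel": "sales", "text": "Acme asked for a demo", "ts": _NOW - timedelta(hours=1)},
    {"channel": "sales", "text": "Old thread about pricing", "ts": _NOW - timedelta(days=9)},
    {"channel": "dev", "text": "Deploy finished", "ts": _NOW - timedelta(hours=3)},
]


def get_recent_slack_messages(channel: str, hours: int = 24) -> list[str]:
    """Return message texts from `channel` posted within the last `hours` hours."""
    cutoff = _NOW - timedelta(hours=hours)
    return [
        m["text"]
        for m in FAKE_SLACK
        if m["channel"] == channel and m["ts"] >= cutoff
    ]


# Hypothetical registry: the agent loop looks tools up by name.
TOOLS = {"get_recent_slack_messages": get_recent_slack_messages}


def run_tool_call(call_json: str) -> str:
    """Execute a tool call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return json.dumps({"error": f"unknown tool: {call['name']}"})
    return json.dumps({"result": tool(**call["arguments"])})


if __name__ == "__main__":
    request = '{"name": "get_recent_slack_messages", "arguments": {"channel": "sales"}}'
    print(run_tool_call(request))
```

The point of the sketch: data is fetched at the moment the model asks for it, so there is nothing to index, sync, or re-embed.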

By default, everybody talks about putting everything into a vector database. That is often a complicated setup, because you have to integrate all your data sources, keep them in sync, and so on.

But:

- there is an API that gives you all you need,

- the LLM can use tools and decide what data it needs,

- you don’t need to copy-paste-embed all the data you have,

- you probably already have a system that exposes your data through an API.

It is much simpler to hook up the API than to build a whole ETL process.

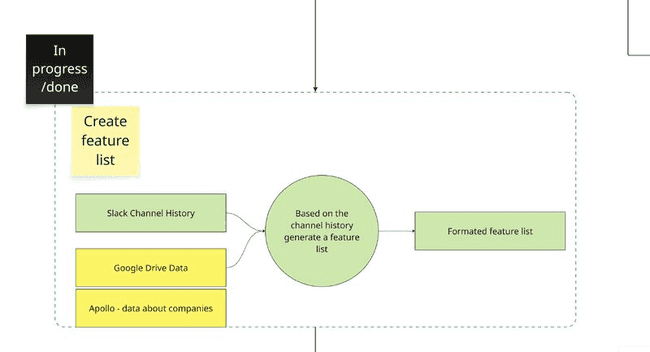

The image shows our black box. We just wired up the API to fetch the necessary data, and the LLM produces output based on our prompts. Later, the agent can use it as a tool call. I think this is quite a good example of KISS.
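As a rough sketch of that black box, assuming the simplest possible shape: one function fetches data from an API, one builds the prompt, and the pair is wrapped into a single callable the agent can later use as a tool. Every name here (`fetch_company_profile`, `build_prompt`, `make_company_brief_tool`, `fake_llm`) and the canned company record are hypothetical; the fetch is a stand-in, not a real Apollo call, and the "LLM" is a fake so the example runs offline.

```python
from typing import Callable


def fetch_company_profile(domain: str) -> dict:
    """Hypothetical stand-in for a company-data API request."""
    # Canned record (assumption) so the sketch runs without network access.
    return {
        "domain": domain,
        "name": "Example Corp",
        "industry": "Software",
        "employees": 120,
    }


def build_prompt(profile: dict) -> str:
    """Turn the raw API record into the prompt the LLM will see."""
    facts = "\n".join(f"- {key}: {value}" for key, value in profile.items())
    return f"Summarize this company in two sentences for a sales call.\n{facts}"


def make_company_brief_tool(llm: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap 'API fetch + prompt + LLM' into one function usable as a tool."""

    def company_brief(domain: str) -> str:
        return llm(build_prompt(fetch_company_profile(domain)))

    return company_brief


def fake_llm(prompt: str) -> str:
    """Fake model: reports how many fact lines it received."""
    fact_count = sum(line.startswith("- ") for line in prompt.splitlines())
    return f"[brief from {fact_count} facts]"


if __name__ == "__main__":
    company_brief = make_company_brief_tool(fake_llm)
    print(company_brief("example.com"))
```

Passing the model in as a plain callable keeps the box easy to test: swap `fake_llm` for a real client and nothing else has to change.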